Managing OCP Infrastructures Using GitOps (Part 2)

In the first part of this series, I covered how to take the raw YAML definitions (i.e., InfraEnv, AgentClusterInstall, etc.) and apply these objects from the command line to discover a bare-metal host, which would eventually be installed as a Single Node OpenShift (SNO) cluster.

In this article, I will show how to compose a single site definition (SiteConfig) YAML and generate those separate object definitions from it. A SiteConfig is much easier to work with: it is more concise and takes some of the guesswork out of crafting each of these YAML definitions by hand.

The ability to parse this single SiteConfig YAML and generate the individual YAML files comes from the following container image:

registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11.1

We will use this container extensively during this exercise.

The first thing I want to show is a sample SiteConfig. It is similar to the configuration in the article I wrote yesterday, which showed how to discover and instantiate a SNO cluster from the ACM GUI.

This is just a sample and will need to be edited for your environment.

apiVersion: ran.openshift.io/v1
kind: SiteConfig
metadata:
  name: "ztp-spoke"
  namespace: "ztp-spoke"
spec:
  baseDomain: "ocp-poc-demo.com"
  pullSecretRef:
    name: "assisted-deployment-pull-secret"
  clusterImageSetNameRef: "openshift-4.11"
  sshPublicKey: "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCuZJde1Y4zxIjaq6CXxM+zFTWNF2z3LMnxIUAGtU7InyMPEmdstTNBXQ5e27MbZjiLkL7pxYl/LFMOs7UARJ5/GWG4ijSD35wEwohsDDGoTeSTf/j5Dsaz3Wl5NDEH4jRUvxU5TOtTgNBx/aBIMPw/GWKAKEwxKOMGU0mLA4v9e06oX1PbhX9Y/WQ/6+fNmX/wSX0UlIQ1R3DakW/ocH1HI3x1rWWdBzDa/8DPYMSMy5hxr4XpKYqzn9+5uPfozfejcfEAdqV5yCQOnP1XO55PWt4r7uIkqc3a9wCskwbW81nGsYKb6n8c/MaSu6S9UUxM+nx+/GRB/BzpxFpYzdWsx0/J2LWqGJ16lvjLdWwOliBvfKSxxbwP8tGVK5nWSAHAQGLXnvK+/uP9AjhKQeCahn859mq4bLfoWl6Q/0pkUA5XRJG/M/59djrUXoqBDMFguFE80JQqcrgDvGbpkZnAHrm+d4Am6ZkPri/R0V/alsdScWyeG2GBv52lENhz220= ocpuser@bastion"
  clusters:
  - clusterName: "spoke"
    networkType: "OVNKubernetes"
    clusterLabels:
      vendor: Openshift
    clusterNetwork:
    - cidr: 10.128.0.0/14
      hostPrefix: 23
    machineNetwork:
    - cidr: 198.102.28.30/29
    serviceNetwork:
    - 172.30.0.0/16
    #crTemplates:
    #  KlusterletAddonConfig: "KlusterletAddonConfigOverride.yaml"
    nodes:
    - hostName: "spoke"
      role: "master"
      bmcAddress: redfish-virtualmedia+http://192.168.100.1:8000/redfish/v1/Systems/88158323-03ff-44c6-a28a-4d9ab894fc6f
      bmcCredentialsName:
        name: "bmh-secret"
      bootMACAddress: "52:54:00:9a:d2:5b"
      bootMode: "UEFI"
      nodeNetwork:
        interfaces:
        - name: enp1s0
          macAddress: "52:54:00:9a:d2:5b"
        config:
          interfaces:
          - name: enp1s0
            type: ethernet
            state: up
            macAddress: "52:54:00:9a:d2:5b"
            ipv4:
              address:
              - ip: 192.168.100.2
                prefix-length: 29
              dhcp: false
              enabled: true
          dns-resolver:
            config:
              search:
              - nv.triara.poc
              server:
              - 192.168.100.1
          routes:
            config:
            - destination: 0.0.0.0/0
              next-hop-interface: enp1s0
              next-hop-address: 192.168.100.1
              table-id: 254

The ztp-site-generate container referenced above also ships with sample (Governance) policies and machine-config settings. Some of these settings are quite opinionated by default, since they were originally written for RAN deployments, but they can be modified to suit any type of cluster.

I will touch on the machine-configs and policies briefly, just to give an idea of how things work.
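Before feeding a SiteConfig to the generator, it can be worth sanity-checking the networking values, since a mismatch may otherwise only show up much later as a failed install. The sketch below is a hypothetical helper, not part of ztp-site-generate: it copies the relevant values from the sample above into a plain Python dict (to avoid depending on a YAML library) and checks that the node's static IP falls inside machineNetwork, that the default gateway is on the node's subnet, and that bootMACAddress matches an interface MAC. The expectation that the node address sits inside machineNetwork is my assumption about what the installer validates; confirm it against the documentation for your OCP version.

```python
import ipaddress

# Hypothetical helper (not part of ztp-site-generate): basic sanity checks on
# the networking values of a single SNO node. Values are copied from the
# sample SiteConfig above into a plain dict, so no YAML library is needed.
SAMPLE_NODE = {
    "machine_network": "198.102.28.30/29",
    "boot_mac": "52:54:00:9a:d2:5b",
    "interface_macs": ["52:54:00:9a:d2:5b"],
    "static_ip": "192.168.100.2",
    "prefix_length": 29,
    "gateway": "192.168.100.1",
}


def check_node(node: dict) -> list[str]:
    """Return a list of human-readable problems (empty if none were found)."""
    problems = []
    # strict=False tolerates host bits being set, e.g. "198.102.28.30/29".
    machine_net = ipaddress.ip_network(node["machine_network"], strict=False)
    # The node's own address together with its prefix length.
    node_if = ipaddress.ip_interface(f'{node["static_ip"]}/{node["prefix_length"]}')

    if node_if.ip not in machine_net:
        problems.append(f"{node_if.ip} is not inside machineNetwork {machine_net}")
    if ipaddress.ip_address(node["gateway"]) not in node_if.network:
        problems.append(f'gateway {node["gateway"]} is not on {node_if.network}')
    macs = {mac.lower() for mac in node["interface_macs"]}
    if node["boot_mac"].lower() not in macs:
        problems.append(f'bootMACAddress {node["boot_mac"]} matches no interface')
    return problems


if __name__ == "__main__":
    for problem in check_node(SAMPLE_NODE):
        print("WARNING:", problem)
```

Run against the sample values, this reports that 192.168.100.2 is not inside 198.102.28.24/29 (the /29 that contains 198.102.28.30), so in a real deployment one of those two values would presumably need to change, for example machineNetwork set to 192.168.100.0/29.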

Steps to Parse Site Config

1. Podman will need to be installed on your bastion/jumphost.

yum install podman -y

2. Make a directory to house sample files and directories from the ztp-site-generate container.

mkdir -p ./out

3. The container has a /home/ztp directory, which we will extract into the out directory.

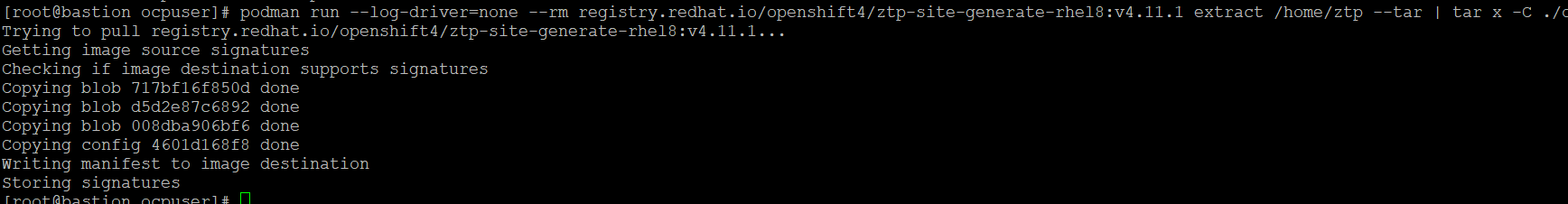

podman run --log-driver=none --rm registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11.1 extract /home/ztp --tar | tar x -C ./out

4. The out directory now contains several subdirectories:

cd out

ls

The subdirectories are listed below, along with a high-level summary of what each one contains.

See my GitHub repo, which contains the same contents that were just extracted from this container. This repo will be used more heavily in part 3 of this series for GitOps/ArgoCD operations.

argocd: This directory contains a helpful README file that I used extensively while writing this article. The example subdirectory contains policygentemplates and siteconfig examples for a few different cluster configurations (SNO, 3-node, etc.).

The idea behind this structure is to group types of clusters according to the specific machine-configs or policies that will be pushed to each of them. A deeper dive on this will come in the next article.

extra-manifests: This directory contains machine-config settings that may or may not be pushed depending on the cluster type, grouping, etc. The container determines which machine configs get applied based on labels, roles, and cluster type.

source-CRs: These are object definitions that are parsed by the site generator container. The site generator container also uses the policyGenerator tooling, which was explained in this article, to generate the appropriate ACM policies based on labels, roles, and cluster type.
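The grouping described above relies on cluster labels: a set of policies is bound to every managed cluster whose labels match that group's selector (in the example PolicyGenTemplates, this is the bindingRules field). As a rough mental model only, and not ACM's actual placement code, here is equality-based matching in the style of a Kubernetes matchLabels selector. The group names and selectors are hypothetical; only vendor: Openshift comes from the sample SiteConfig's clusterLabels.

```python
# Rough model (a simplification, not the ACM implementation) of how label
# selection decides which policy groups apply to a managed cluster: every
# key/value pair in a group's selector must be present on the cluster.


def matches(selector: dict[str, str], cluster_labels: dict[str, str]) -> bool:
    """Equality-based matching, like a Kubernetes matchLabels selector."""
    return all(cluster_labels.get(key) == value for key, value in selector.items())


def applicable_groups(groups: dict[str, dict[str, str]],
                      cluster_labels: dict[str, str]) -> list[str]:
    """Names of the policy groups whose selector matches the cluster labels."""
    return sorted(name for name, sel in groups.items() if matches(sel, cluster_labels))


# Hypothetical policy groups and selectors, for illustration only.
GROUPS = {
    "common": {"common": "true"},
    "group-sno": {"group-sno": ""},
    "vendor-openshift": {"vendor": "Openshift"},
}

if __name__ == "__main__":
    # clusterLabels from the sample SiteConfig above.
    print(applicable_groups(GROUPS, {"vendor": "Openshift"}))
    # With an extra (hypothetical) label, another group is selected.
    print(applicable_groups(GROUPS, {"vendor": "Openshift", "common": "true"}))
```

With only vendor: Openshift set, just the hypothetical vendor-openshift group matches; adding common: "true" pulls in the common group as well. In this model an empty selector matches every cluster, as with Kubernetes label selectors, and set-based expressions are ignored.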

5. For this demonstration, the only change I will make is to add the SiteConfig file (called spoke.yaml) to the out/argocd/example/siteconfig subdirectory:

cp spoke.yaml out/argocd/example/siteconfig

6. Now we can run the site generator container against these contents. First, let's create a directory to collect the output.

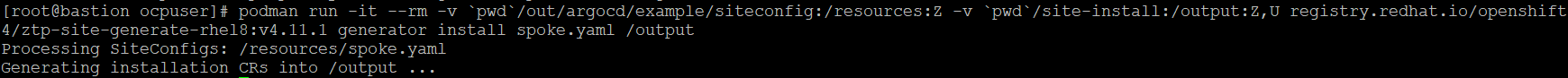

mkdir site-install

7. Run the site generator container and write the individual YAML objects to the site-install directory:

podman run -it --rm -v `pwd`/out/argocd/example/siteconfig:/resources:Z -v `pwd`/site-install:/output:Z,U registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11.1 generator install spoke.yaml /output

As long as the site config was parsed correctly, you should see a message saying that the CRs were written to the output directory (which is mapped to the local site-install directory).
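If you end up re-running the generator often, it can help to script the invocation. The sketch below is a hypothetical convenience wrapper, not something shipped with the container: it assembles the same podman command used in this step (and, with different arguments, in steps 10 and 11) from Python, and defaults to a dry run that only prints the command. It drops the -it flags, on the assumption that no interactive terminal is needed when scripting.

```python
import shlex
import subprocess
from pathlib import Path

# Image tag used throughout this article.
IMAGE = "registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11.1"


def generator_cmd(resources: Path, output: Path, *generator_args: str,
                  image: str = IMAGE) -> list[str]:
    """Build a `podman run ... generator <args>` command like the ones above.

    The input directory is mounted at /resources and the output directory at
    /output, with the same :Z and :Z,U volume options the article uses.
    """
    return [
        "podman", "run", "--rm",
        "-v", f"{resources.resolve()}:/resources:Z",
        "-v", f"{output.resolve()}:/output:Z,U",
        image,
        "generator", *generator_args,
    ]


def run_generator(resources: Path, output: Path, *generator_args: str,
                  dry_run: bool = True) -> list[str]:
    """Print the command (dry run) or create the output dir and execute it."""
    cmd = generator_cmd(resources, output, *generator_args)
    if dry_run:
        print(shlex.join(cmd))
    else:
        output.mkdir(parents=True, exist_ok=True)  # same role as `mkdir -p`
        subprocess.run(cmd, check=True)           # raises if the generator fails
    return cmd


if __name__ == "__main__":
    example = Path("out/argocd/example")
    # Step 7: install CRs for the site.
    run_generator(example / "siteconfig", Path("site-install"),
                  "install", "spoke.yaml", "/output")
    # Step 10: the same, plus extra manifests (-E).
    run_generator(example / "siteconfig", Path("site-machineconfig"),
                  "install", "-E", "spoke.yaml", "/output")
    # Step 11: policies rendered from the PolicyGenTemplates.
    run_generator(example / "policygentemplates", Path("ref"),
                  "config", "-N", ".", "/output")
```

Passing dry_run=False runs podman for real; keeping the command construction separate from execution makes it easy to review exactly what will be mounted before anything is written.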

8. Change to the site-install directory and look at its contents:

cd site-install

ls

There is a subdirectory called ztp-spoke.

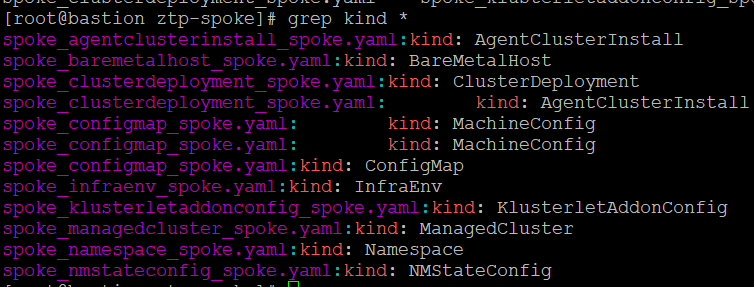

9. Change into that subdirectory and see what kinds of objects were created:

cd ztp-spoke

grep kind *

We see various types of objects that should look familiar. They are similar to the objects we created manually in the first part of this series, plus some additional machine-config settings (some based on opinionated RAN defaults, but customizable).
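grep kind * prints every line containing "kind", including nested references inside other objects. If a per-kind tally is more useful, the sketch below (a hypothetical helper, assuming the generated manifests use the .yaml extension) counts only unindented kind: keys, so each YAML document contributes exactly its own object kind.

```python
import re
from collections import Counter
from pathlib import Path

# A top-level (unindented) "kind:" key, optionally quoted. Nested "kind:" keys,
# e.g. inside an object reference, are indented and therefore not matched.
KIND_RE = re.compile(r"^kind:[ \t]*['\"]?(\w+)", re.MULTILINE)


def count_kinds(directory: Path) -> Counter:
    """Count top-level object kinds across the *.yaml files in a directory.

    Multi-document files (separated by ---) contribute one entry per document.
    """
    counts = Counter()
    for path in sorted(directory.glob("*.yaml")):
        counts.update(KIND_RE.findall(path.read_text()))
    return counts


if __name__ == "__main__":
    # Output of step 7 for the "ztp-spoke" site.
    for kind, n in sorted(count_kinds(Path("site-install/ztp-spoke")).items()):
        print(f"{n:3d}  {kind}")
```

This is plain text matching rather than YAML parsing, which keeps it dependency-free but means unusual layouts (for example, a top-level list of objects) would not be counted.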

10. Let's now look at additional machine-config settings that will get applied.

Go back to the parent directory (the one that contains out) and run:

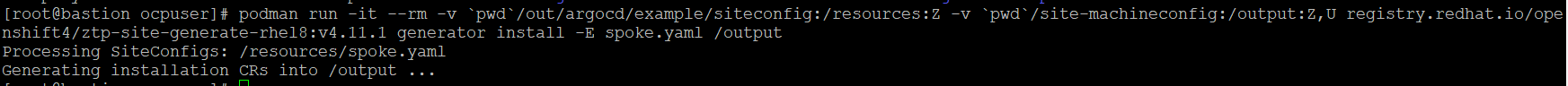

mkdir -p ./site-machineconfig

podman run -it --rm -v `pwd`/out/argocd/example/siteconfig:/resources:Z -v `pwd`/site-machineconfig:/output:Z,U registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11.1 generator install -E spoke.yaml /output

cd site-machineconfig/ztp-spoke

Inside the ztp-spoke subdirectory, there are some additional machine-config manifests/objects.
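Which MachineConfig pool each of these manifests targets is determined by its machineconfiguration.openshift.io/role label. The hypothetical helper below groups the files in this output directory by that label. It uses simple text matching rather than a YAML parser and assumes one object per file, so treat it as a quick inspection aid rather than a validator.

```python
import re
from collections import defaultdict
from pathlib import Path

# MachineConfigs target a pool through this label; the value is usually
# "master" or "worker" (on SNO, the single node belongs to the master pool).
ROLE_RE = re.compile(r"machineconfiguration\.openshift\.io/role:[ \t]*['\"]?([\w-]+)")
# Exactly "kind: MachineConfig", so MachineConfigPool and others are skipped.
KIND_RE = re.compile(r"^kind:[ \t]*['\"]?MachineConfig['\"]?[ \t]*$", re.MULTILINE)


def machineconfigs_by_role(directory: Path) -> dict[str, list[str]]:
    """Map role label -> file names of MachineConfig manifests (one object per file)."""
    by_role = defaultdict(list)
    for path in sorted(directory.glob("*.yaml")):
        text = path.read_text()
        if not KIND_RE.search(text):
            continue  # some other kind of manifest
        match = ROLE_RE.search(text)
        by_role[match.group(1) if match else "<no role label>"].append(path.name)
    return dict(by_role)


if __name__ == "__main__":
    for role, files in machineconfigs_by_role(Path("site-machineconfig/ztp-spoke")).items():
        print(role, *files, sep="\n  ")
```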

11. Lastly, let's look at the policies that will be pushed to this cluster. These are generated by the policy generator contained within the site generator container. Run the following from the parent directory again (not from site-machineconfig/ztp-spoke):

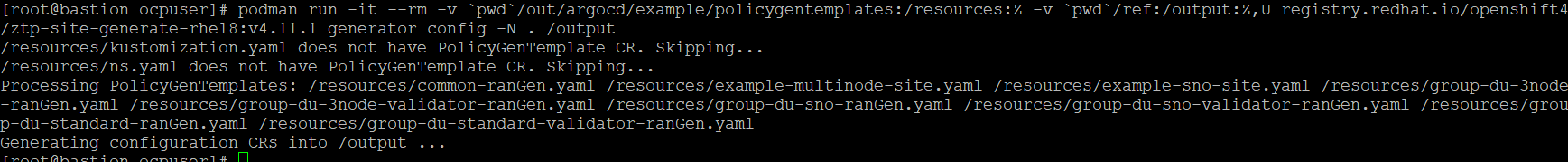

mkdir -p ./ref

podman run -it --rm -v `pwd`/out/argocd/example/policygentemplates:/resources:Z -v `pwd`/ref:/output:Z,U registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11.1 generator config -N . /output

The output is written to the ref subdirectory.

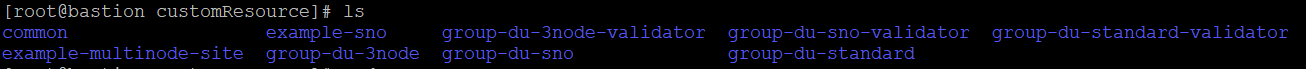

The ref/customResource subdirectory contains policies grouped by role, label, and cluster type.

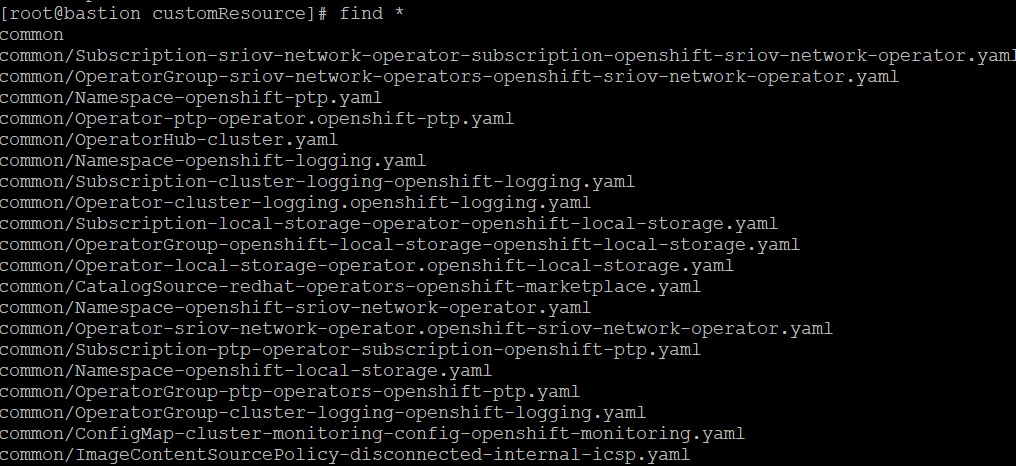

Each of these subdirectories contains a number of policies.

Here is an example from the common subdirectory, whose settings get applied to all clusters:

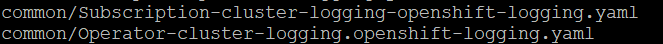

One example of a policy contained here creates the Subscription that installs the OpenShift Logging Operator.

I hope you enjoyed this article. The next part of this series will combine what we have learned to deploy clusters using ArgoCD and the OpenShift GitOps Operator.