Query OCP API from Pod

I've been sort of busy with projects lately, but here is a quick article to keep things going.

Recently, I was asked for a way to query the Kubernetes/OCP API for some information. The requirement was a pod (based on the minimal ubi8 image) that runs once, queries the Kubernetes/OCP API for the current number of worker nodes in the cluster, and then exits. This is by no means the only way to get this information, but it highlights several constructs in OCP/Kubernetes, such as:

- Service Accounts
- Cluster-Roles
- Role Bindings
- ConfigMaps
- Deployments

So in this article, you will get a primer on how all of these pieces fit together to accomplish this task.

Here is a link to the GitHub repo that shows this code

The first step is to create a namespace/project to hold everything:

```shell
oc new-project query-api
```

Service Account

Once the namespace/project exists, create the service account that the deployment will run as. The permissions needed for the query are granted to this account.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: query-api
  namespace: query-api
```

```shell
oc apply -f sa.yaml
```

Cluster-Role

1. Next, create the cluster role that gives the query-api service account the rights it needs to query the API. It is best to grant only what is needed.

2. For this particular use case (getting the number of worker nodes), I am using the MachineConfigPool object in the API.

The query that will ultimately be run on the pod is as follows:

```shell
curl https://kubernetes.default.svc/apis/machineconfiguration.openshift.io/v1/machineconfigpools/worker
```
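The path in that URL follows the standard Kubernetes layout for named API groups, `/apis/<group>/<version>/<resource>/<name>`, and the group and resource parts are exactly what the cluster role below refers to (`apiGroups` and `resources`). As a small sketch of that mapping, here is a hypothetical `api_url` helper, written only for illustration:

```shell
#!/bin/sh
# Hypothetical helper (illustration only): build the URL of a cluster-scoped
# object in a named API group.
# Arguments: API server, group, version, resource (plural), optional object name.
# With a name, the request reads one object (RBAC verb "get"); without a name,
# it reads the whole collection (RBAC verb "list").
api_url() {
  url="$1/apis/$2/$3/$4"
  if [ -n "${5:-}" ]; then
    url="$url/$5"
  fi
  printf '%s\n' "$url"
}
```

Calling `api_url https://kubernetes.default.svc machineconfiguration.openshift.io v1 machineconfigpools worker` reproduces the URL above; dropping the last argument gives the collection URL, which is why the role grants `list` as well as `get`.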

The service account token, which is mounted in the pod at /var/run/secrets/kubernetes.io/serviceaccount/token, is used to authenticate to the API, and the ca.crt located at /var/run/secrets/kubernetes.io/serviceaccount/ca.crt allows curl to trust the CA that issued the Kubernetes API certificate.
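Those two files are all the credentials the pod needs. A minimal sketch of turning them into a request header, assuming a hypothetical `bearer_header` function (its optional directory argument exists only so it can be tried outside a pod), that fails early if the mount is missing:

```shell
#!/bin/sh
# Hypothetical helper: print the Authorization header for the pod's service account.
# $1 defaults to the directory where Kubernetes mounts the token and CA bundle.
bearer_header() {
  sa_dir=${1:-/var/run/secrets/kubernetes.io/serviceaccount}
  # Fail early with a clear message if either file was not mounted
  if [ ! -r "$sa_dir/token" ] || [ ! -r "$sa_dir/ca.crt" ]; then
    echo "service account token or ca.crt not found in $sa_dir" >&2
    return 1
  fi
  printf 'Authorization: Bearer %s\n' "$(cat "$sa_dir/token")"
}
```

Inside the pod it would be used along the lines of `curl -s --cacert /var/run/secrets/kubernetes.io/serviceaccount/ca.crt --header "$(bearer_header)" <url>`.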

3. To get back to the task at hand, the cluster role needs to allow access only to specific API groups (machineconfiguration.openshift.io in this case), and the actions (verbs) it permits are limited to get and list.

```yaml
# Deliberately no aggregationRule: for an aggregated ClusterRole the control plane
# replaces `rules` with the rules of the selected roles (those aggregated into
# view and cluster-reader), which would grant far more than get/list below.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: query-api
rules:
  - apiGroups:
      - machineconfiguration.openshift.io
    resources:
      - machineconfigpools
    verbs:
      - get
      - list
```

```shell
oc apply -f cluster-role.yaml
```

Role Binding

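The binding links the cluster role named query-api to the service account the pod runs as (also query-api). Because MachineConfigPools are cluster-scoped (they do not live in any namespace), the cluster role has to be bound with a ClusterRoleBinding; a namespaced RoleBinding only grants permissions inside its own namespace and would not cover this request.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: query-api
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: query-api
subjects:
  - kind: ServiceAccount
    name: query-api
    namespace: query-api
```

ConfigMap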

In this case, the ConfigMap holds the shell script that runs in the pod. The ConfigMap is mounted as a volume, and the script ends up at /tmp/getnumberofworkers.

```yaml
apiVersion: v1
data:
  getnumberofworkers: |
    #!/bin/sh
    # Kubernetes mounts the pod's service-account credentials here
    APISERVER=https://kubernetes.default.svc
    SERVICEACCOUNT=/var/run/secrets/kubernetes.io/serviceaccount
    NAMESPACE=$(cat ${SERVICEACCOUNT}/namespace)  # not needed for this cluster-scoped query
    TOKEN=$(cat ${SERVICEACCOUNT}/token)
    CACERT=${SERVICEACCOUNT}/ca.crt
    # Fetch the worker MachineConfigPool and print only the line(s) mentioning machineCount
    curl -s --cacert "${CACERT}" --header "Authorization: Bearer ${TOKEN}" -X GET "${APISERVER}/apis/machineconfiguration.openshift.io/v1/machineconfigpools/worker" | grep machineCount
kind: ConfigMap
metadata:
  name: getnumberofworkers
  namespace: query-api
```

Deployment

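The deployment creates a pod that runs the shell script and exits. The result of the curl command, which shows the number of worker nodes, is written to the pod's standard output (stdout). One caveat: a Deployment only accepts restartPolicy: Always, so the container is restarted every time the script exits, and after a few restarts the pod will show CrashLoopBackOff between runs. If the query really needs to run exactly once, a Job (which allows restartPolicy: Never or OnFailure) is the Kubernetes object designed for that.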

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: query-api
spec:
  replicas: 1
  selector:
    matchLabels:
      app: query-api
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: query-api
        deploymentconfig: query-api
      annotations:
    spec:
      containers:
        - name: query-api
          image: registry.access.redhat.com/ubi8/ubi-minimal:8.8-1014
          volumeMounts:
            - name: getnumberofworkers
              mountPath: /tmp/getnumberofworkers
              subPath: getnumberofworkers
          command:
            - /bin/sh
            - -c
            - /tmp/getnumberofworkers
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          imagePullPolicy: Always
      volumes:
        - name: getnumberofworkers
          configMap:
            name: getnumberofworkers
            defaultMode: 0755
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      dnsPolicy: ClusterFirst
      securityContext: {}
      schedulerName: default-scheduler
      imagePullSecrets: []
      serviceAccountName: query-api
      serviceAccount: query-api
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  revisionHistoryLimit: 10
  progressDeadlineSeconds: 600
  paused: false
```

```shell
oc apply -f query-api-deployment.yaml
```

Summary

Some things to note from this deployment/pod definition that tie it all together:

A. A service account called query-api was created and used in the deployment/pod definition.

B. A custom cluster role was created that only provides access to the machineconfiguration.openshift.io API group. Within this API group, the machineconfigpools resource was queried; this is allowed because the get and list verbs were added to the role.

C. The query-api service account was linked to the cluster role.

D. A ConfigMap holds a shell script that sets some variables (the location of the Kubernetes API, the service account token, and the ca.crt) and uses curl to query the number of workers from the API.

E. Lastly, the deployment mounts the ConfigMap as a volume/file at /tmp/getnumberofworkers. The pod is based on the ubi8 minimal image; its container runs the script and exits, after which the Deployment restarts it.

F. The output of this script can be viewed in the pod's logs.
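To check the binding from item C without reading pod logs, `oc auth can-i` can impersonate the service account. Service accounts authenticate as `system:serviceaccount:<namespace>:<name>`; the `sa_user` helper below is hypothetical and only assembles that string. Because MachineConfigPools are cluster-scoped, the example check in the comment uses `--all-namespaces` so it is not tied to the current project.

```shell
#!/bin/sh
# Hypothetical helper: the username a service account authenticates as.
# $1 = namespace, $2 = service account name
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Example check (needs a logged-in oc session that is allowed to impersonate):
#   oc auth can-i get machineconfigpools.machineconfiguration.openshift.io \
#     --all-namespaces --as="$(sa_user query-api query-api)"
```

`oc auth can-i` answers yes or no, so a "no" here points at the role or binding rather than at the script.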

Final Product

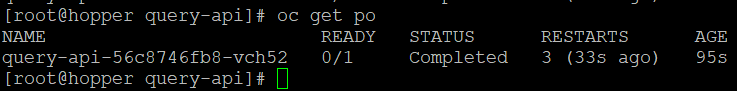

```shell
# Shows the pod is in Completed status
oc get po
```

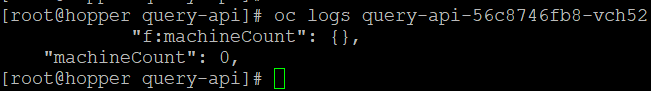

```shell
# Shows the output. Disregard the zero here: my cluster is a
# single-node OpenShift (SNO) cluster.
# oc logs <nameofyourpod>
oc logs query-api-56c8746fb8-vch52
```

This could be cleaned up further by adding something like jq to the image, but this is enough to get started.
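Even without jq, the bare number can be pulled out with grep alone, which the script already relies on. A minimal sketch, assuming GNU grep (its `-o` option is not POSIX) and a hypothetical `machine_count` function that reads the API response on stdin:

```shell
#!/bin/sh
# Hypothetical helper: print only the value of "machineCount" from
# MachineConfigPool JSON on stdin. grep is case-sensitive, so fields such as
# readyMachineCount or updatedMachineCount do not match.
# Works on pretty-printed and single-line JSON ("machineCount": 3 or "machineCount":3).
machine_count() {
  grep -o '"machineCount": *[0-9][0-9]*' | grep -o '[0-9][0-9]*$'
}
```

In the ConfigMap script, `| grep machineCount` would become `| machine_count` (with the function defined earlier in the script). If jq is added to the image instead, `jq '.status.machineCount'` reads the same field.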